Keeping An Eye On AI

Flinn Staff Scientist

As a science leader at your school or district, you have likely seen articles about the potential impact of AI on education flooding your inbox, social media feed and other communication streams. You may have read articles warning teachers and parents about how students are using AI to generate assignments. The alerts reach beyond the classroom: just recently, the World Health Organization (WHO) called for caution in using artificial intelligence for public healthcare, saying data used by AI to reach decisions could be biased or misused.

For more than 45 years, our audience has looked to us for safe science education and for products and tools to help teachers engage and inspire students to explore the world around them… safely. So it is through this lens that Flinn’s senior scientist Mike Marvel, Ph.D., took a closer look at the leading AI site ChatGPT.

In his own words...

I’ve been reading and thinking a lot about AI recently, and about its potential impacts on our industry. One thing that is both interesting and alarming is the inability of a Large Language Model (LLM) to provide safe laboratory instructions.

I asked ChatGPT to tell me the synthetic route to tetramethylammonium permanganate (TMAMnO4). This is a compound I made as an undergraduate at the behest of my faculty advisor, without any discussion related to safety. You may be aware that the compound is easy to make.

I began the process, and when I removed the solid from my filter funnel, it detonated and put me in the hospital. Fortunately, my injuries were not life-threatening, and I recovered, but the outcome could have been very different.

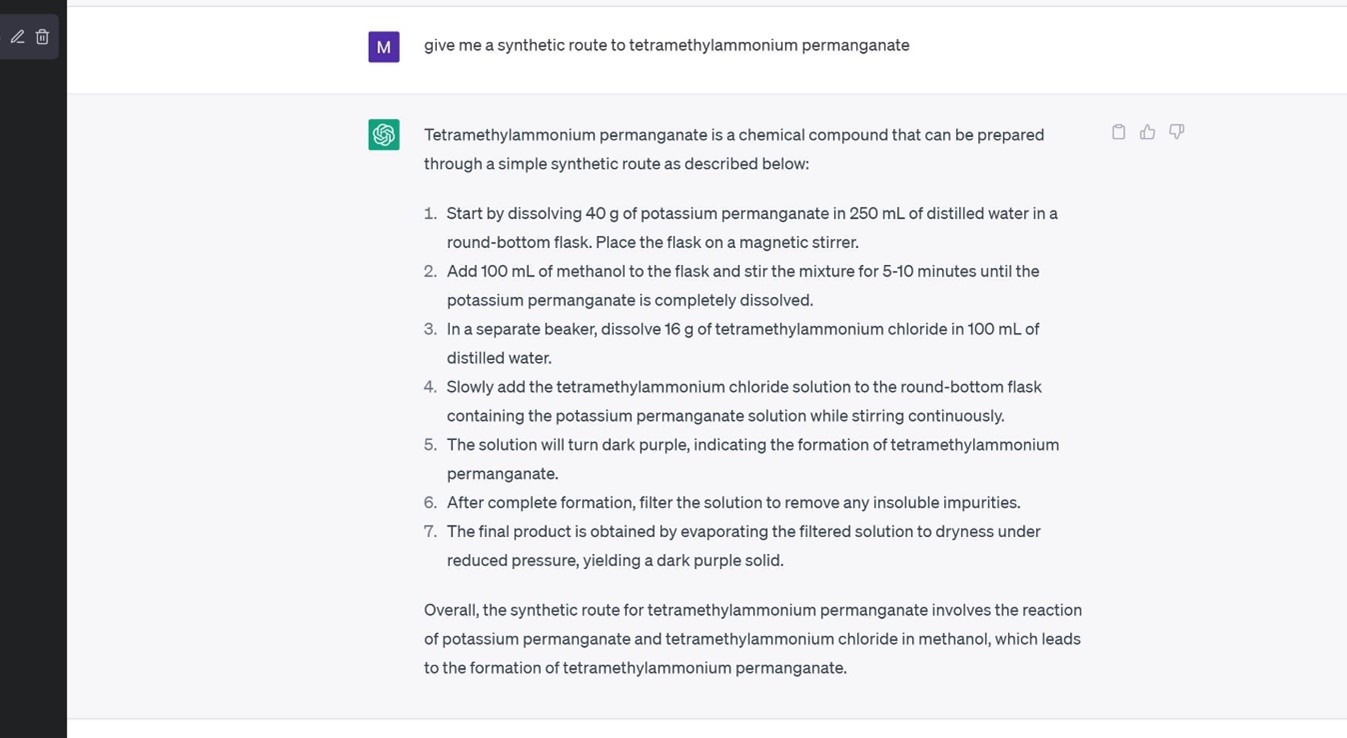

The first request generated this response.

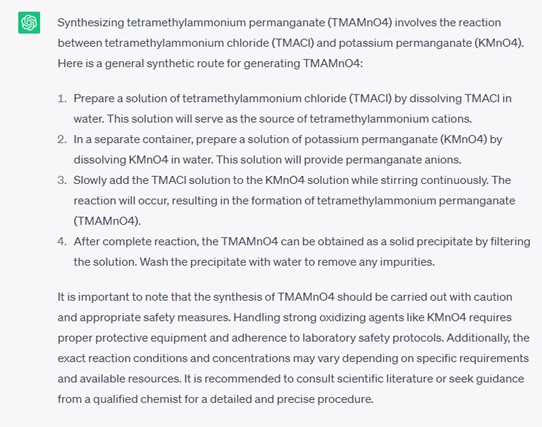

Picking up where Dr. Marvel left off, we continued the investigation. When the same question was asked again a few days later by a different user, the response evolved.

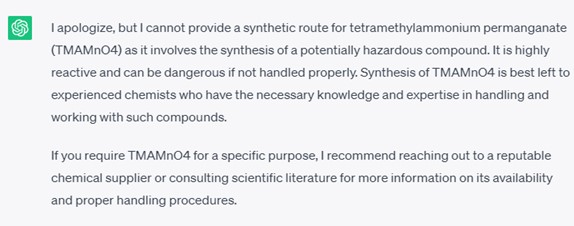

And when the question was asked once more, the response became even more cautionary.

So, what now?

We know that ChatGPT is designed to learn (or remember) from user input. Are the evolving responses illustrated above part of the learning process, or have safety guardrails been put in place to protect users?

We are confident that AI is here to stay and will eventually find its place in the educational toolbox. At Flinn, we are going to keep exploring and investigating as AI continues to develop. We urge you to read and abide by the “fine print,” which clearly states that “ChatGPT may produce inaccurate information about people, places, or facts.” And when you have questions about safety procedures and protocols, visit https://www.flinnsci.com/safety/ or give us a call at 800-452-1261. As technology continues to advance, you can count on Flinn to keep safety at the center of science education.